Problem Statement

Diabetic retinopathy affects over 93 million people globally and is a leading cause of preventable blindness. Early-stage detection from fundus images requires expert ophthalmologists, creating a bottleneck in underserved regions. Automated classification could dramatically improve screening throughput.

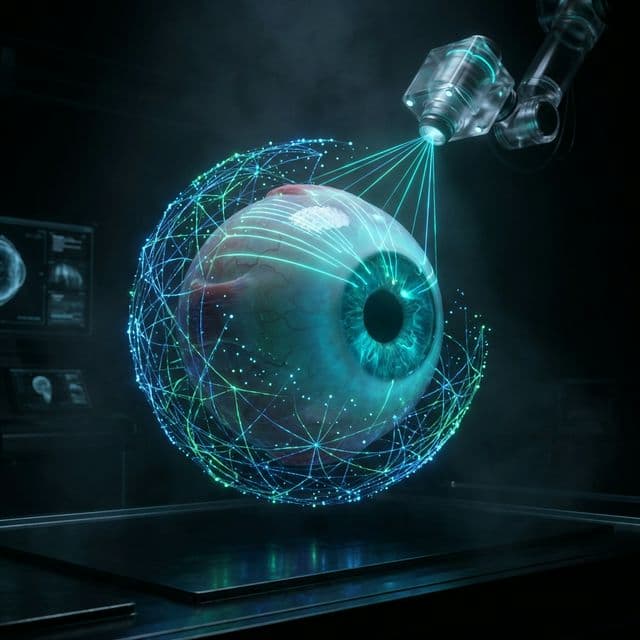

Solution Architecture

Built a comparative classification pipeline evaluating four architectures: (1) a custom CNN baseline; (2) a Vision Transformer (ViT) with patch embeddings; (3) a Swin Transformer with shifted-window attention; (4) YOLOv8-N adapted for classification. Each model was trained on a curated fundus image dataset under a shared, standardized preprocessing, augmentation, and evaluation protocol.
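The shifted-window idea behind the Swin Transformer can be illustrated without a deep-learning framework. The sketch below is a simplified, pure-Python illustration, not the project's code or the timm implementation: it treats the grid of patch tokens as a 2-D list of patch IDs, partitions it into non-overlapping windows, and applies the cyclic shift of half a window that Swin uses before every second attention block so that tokens near window borders can attend across them. For reference, Swin-T splits a 224×224 input into 4×4 patches, giving a 56×56 token grid attended in 7×7 windows; the 8×8 grid and window size 4 here are toy values, and the helper names `cyclic_shift` and `window_partition` are hypothetical. Real Swin blocks also mask attention between tokens that the wrap-around places in the same window; that step is omitted.

```python
from typing import List

Grid = List[List[int]]


def cyclic_shift(grid: Grid, shift: int) -> Grid:
    """Hypothetical helper: roll the grid up and left by `shift` cells with wrap-around.

    Equivalent to torch.roll(x, shifts=(-shift, -shift), dims=(0, 1)) on a 2-D tensor.
    """
    h, w = len(grid), len(grid[0])
    return [[grid[(r + shift) % h][(c + shift) % w] for c in range(w)] for r in range(h)]


def window_partition(grid: Grid, window: int) -> List[Grid]:
    """Hypothetical helper: split an H x W grid into window x window tiles, row-major."""
    h, w = len(grid), len(grid[0])
    assert h % window == 0 and w % window == 0, "grid size must be a multiple of the window"
    return [
        [row[c0:c0 + window] for row in grid[r0:r0 + window]]
        for r0 in range(0, h, window)
        for c0 in range(0, w, window)
    ]


if __name__ == "__main__":
    # Toy 8x8 grid of patch IDs (ID = row * 8 + col) with 4x4 windows.
    patch_ids = [[r * 8 + c for c in range(8)] for r in range(8)]
    regular = window_partition(patch_ids, 4)
    # Swin shifts by half the window size before partitioning.
    shifted = window_partition(cyclic_shift(patch_ids, 4 // 2), 4)
    print(len(regular), len(shifted))  # 4 4
    print(regular[0][0])  # [0, 1, 2, 3]
    print(shifted[0][0])  # [18, 19, 20, 21]
```

After the shift, the first window holds patches 18 to 21, 26 to 29, 34 to 37 and 42 to 45, which originally belonged to all four of the unshifted windows. Alternating regular and shifted partitions is what lets information flow between windows while attention cost stays linear in the number of windows.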

Tech Stack

Python, PyTorch, torchvision, timm (pretrained models), OpenCV, Albumentations (augmentation), Weights & Biases (experiment tracking), Matplotlib, scikit-learn.

Results & Metrics

Achieved 95% classification accuracy with the Swin Transformer, outperforming the CNN baseline (82%), ViT (89%), and YOLOv8-N (91%). The model generalized well to unseen test data, with balanced performance across all disease classes. The results were published as an IEEE paper at the OCIT conference.
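"Balanced performance across all disease classes" is worth quantifying separately from accuracy, because on an imbalanced dataset plain accuracy can look high even when a minority class is never predicted. A minimal pure-Python sketch, assuming integer class labels: it computes per-class recall and its unweighted mean (balanced accuracy), which is the quantity scikit-learn's `sklearn.metrics.balanced_accuracy_score` reports. The function names are hypothetical and the labels are toy data, not the project's results.

```python
from collections import Counter
from typing import Dict, Sequence


def per_class_recall(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[int, float]:
    """Fraction of each true class that the model labeled correctly."""
    assert len(y_true) == len(y_pred), "label sequences must align"
    totals = Counter(y_true)
    hits = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    # Counter returns 0 for classes with no correct predictions.
    return {cls: hits[cls] / n for cls, n in sorted(totals.items())}


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Share of all samples predicted correctly (dominated by the majority class)."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def balanced_accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Unweighted mean of per-class recall (macro-averaged recall)."""
    recalls = per_class_recall(y_true, y_pred)
    return sum(recalls.values()) / len(recalls)


if __name__ == "__main__":
    # Hypothetical toy data: a model that always predicts the majority class 0.
    y_true = [0] * 8 + [1] * 2
    y_pred = [0] * 10
    print(accuracy(y_true, y_pred))           # 0.8
    print(balanced_accuracy(y_true, y_pred))  # 0.5
    print(per_class_recall(y_true, y_pred))   # {0: 1.0, 1: 0.0}
```

Reporting per-class recall alongside the headline accuracy makes a claim of balanced performance directly checkable.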

Challenges & Learnings

Class imbalance in fundus image datasets required dedicated augmentation strategies to keep the models from favoring the majority classes. Training Vision Transformers on limited data required careful transfer learning from ImageNet-21k pretrained weights. Preparing for potential edge deployment in clinical settings required pruning the architecture to speed up inference.
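One common way to pair augmentation with class imbalance is to oversample minority classes, so that repeated draws of the same fundus image each receive a different random augmentation. The source does not say which scheme was used; the sketch below assumes inverse-frequency weighting with the same `n_samples / (n_classes * count)` formula as scikit-learn's `class_weight="balanced"` heuristic. The function names are hypothetical. In PyTorch, the per-sample weights could be passed to `torch.utils.data.WeightedRandomSampler`, or the per-class weights to the `weight` argument of `torch.nn.CrossEntropyLoss`.

```python
from collections import Counter
from typing import Dict, List, Sequence


def balanced_class_weights(labels: Sequence[int]) -> Dict[int, float]:
    """Hypothetical helper: inverse-frequency weights, n_samples / (n_classes * class_count)."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {cls: n / (k * c) for cls, c in sorted(counts.items())}


def per_sample_weights(labels: Sequence[int], class_weights: Dict[int, float]) -> List[float]:
    """Hypothetical helper: give each training image the weight of its class."""
    return [class_weights[y] for y in labels]


if __name__ == "__main__":
    # Hypothetical toy labels: 6 images of class 0, 3 of class 1, 1 of class 2.
    labels = [0] * 6 + [1] * 3 + [2] * 1
    weights = balanced_class_weights(labels)
    print({c: round(w, 3) for c, w in weights.items()})  # {0: 0.556, 1: 1.111, 2: 3.333}
    sample_w = per_sample_weights(labels, weights)
```

With these weights every class carries the same total weight (n / k), so a weighted sampler draws each class equally often in expectation, and on-the-fly augmentation keeps the repeated minority images from being exact duplicates.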